On Thursday 18th February we looked at the latest hot thing, ChatGPT, and put it through its paces. The site was pretty busy (100 million users as of January), but we were lucky enough to get a slot for the presentation. https://chat.openai.com/auth/login

All you need to get an account is an e-mail address and password.

First, some background information:

ChatGPT (Chat Generative Pre-trained Transformer) is a chatbot developed by OpenAI https://openai.com and launched in November 2022. It is built on top of OpenAI’s GPT-3 family of large language models (LLMs). Given the start of a sentence, an LLM guesses which word probably comes next, taking lots of variables into account to build up context. OpenAI also develops DALL·E (AI art) and Whisper (AI speech recognition). The company has received a huge injection of cash ($11bn) from Microsoft.
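To make the “guess the next word” idea concrete, here is a deliberately tiny sketch in Python. It is a toy, not how GPT-3 actually works: it simply counts which word follows which in a made-up sample sentence (a so-called bigram model) and picks the most frequent follower. GPT-3 instead uses a neural network with 175 billion parameters and looks at a long stretch of preceding text, not just the previous word. The function names and the sample text are hypothetical, chosen for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    # zip pairs each word with the word immediately after it
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def guess_next(follows, word):
    """Return the word most often seen after `word`, or None if `word` was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical sample text, not real training data
corpus = "the club meets on thursday . the club has a website . the club meets in javea ."
model = train_bigrams(corpus)
print(guess_next(model, "club"))  # meets (seen twice, versus "has" once)
print(guess_next(model, "the"))   # club
```

The toy only ever "knows" what was in its sample text, which is a small-scale version of why ChatGPT is limited by its training data.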

Although the core function of a chatbot is to mimic human conversation, ChatGPT is versatile. For example, it can write and debug computer programs; compose music, teleplays, fairy tales, and student essays; answer test questions (sometimes, depending on the test, at a level above the average human test-taker); write poetry and song lyrics; emulate a Linux system; simulate an entire chat room; play games like tic-tac-toe; and simulate an ATM. ChatGPT’s training data includes “man pages” (software documentation found on UNIX systems) and information about Internet phenomena and programming languages. (From Wikipedia)

- It attempts to reduce “harmful” and “deceitful” responses…

- It remembers previous responses and prompts

- It can talk to itself! For example, here’s a conversation between two GPT-3 avatars. So who needs people?? https://youtu.be/jz78fSnBG0s

Humans were involved in training it, and by using it, current users continue to help the company gather data on how well the app is performing. To work, GPT needs to be “trained” on a large amount of text. For example, GPT-3 was trained on hundreds of billions of words of text, drawn largely from web pages, books and Wikipedia. From this text, the model learns to perform natural language processing tasks and generate coherent, well-written text.

Most importantly, its training included reinforcement learning from human feedback (RLHF). Supervised fine-tuning was also done by human trainers who provided conversations in which they played both the user and the AI assistant.

This training by humans sets ChatGPT apart from other, similar AI chatbots (of which there are many!).

For a closer look at the abilities and limitations of ChatGPT see:

https://www.anaconda.com/blog/the-abilities-and-limitations-of-chatgpt

What it can do:

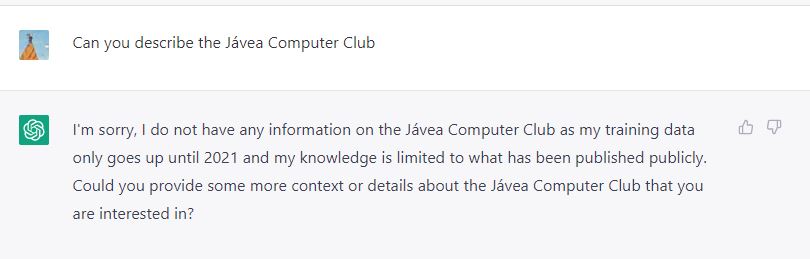

It answers questions, and often gets the answers wrong! A few days before the presentation, Chris asked it: “Can you describe the Jávea Computer Club?” This was the answer:

On the day of the presentation it had changed to this: part fact, part fiction. The fiction is highlighted:

“The Javea Computer Club is a group of computer enthusiasts based in Javea, a coastal town in the province of Alicante, Spain. The club was founded in 2011 and provides a forum for members to share knowledge, learn from one another, and stay up-to-date with the latest developments in the world of technology. The Javea Computer Club organizes regular meetings and workshops where members can come together to discuss a wide range of topics related to computing and technology, including software, hardware, programming, and internet security. The club also offers technical support and advice to members who may be experiencing computer-related issues or problems.

In addition to its regular meetings and workshops, the Javea Computer Club also hosts social events, such as outings, picnics, and other activities, which allow members to get to know each other better and build relationships within the group. Overall, the Javea Computer Club is a great resource for anyone in the area who is interested in technology and wants to learn more about it in a friendly, supportive, and fun environment.”

We asked it about the recent earthquake in Turkey. Initially it cited a quake in 1999; when we tried again, it cited one in the 2000s; and finally it found the 2023 quake, but with out-of-date figures.

Note: later, at home, Chris asked it about a parasite she had studied (information which is available in any parasitology textbook). ChatGPT wrote an authoritative-sounding but factually wrong account, and it took three correcting prompts from Chris before it got it right.

This “mansplaining”, authoritative spewing-out of inaccurate and downright false information is dangerous. The thing writes so well that it sounds, to us gullible humans, as if it knows what it is talking about, which it doesn’t!

Try it for yourself: ask it questions on a topic you know all about, and you will see what a mess it can make.

It writes essays and articles and takes tests, hence educators are worried about students cheating. In a blinded test, ChatGPT was judged to have passed graduate-level exams at the University of Minnesota at the level of a C+ student, and at the Wharton School of the University of Pennsylvania with a B to B- grade. It has apparently passed a US bar exam.

This is just hi-tech plagiarism. Teaching methods and objectives should be improved to inspire students to want to learn and understand. New methods of testing the desired skills must also be developed.

It writes computer code from a description of what you want to do, having been trained on a huge corpus of code. Programmers have reported it to be a very handy tool.

It translates.

Later, Chris challenged it to see if it would recognise the significance of palindromes in a Korean screenplay. It seemed to succeed, with the caveat that the subject had been widely discussed on the internet.

Translation AI software can do the grunt work, but you need people to provide the insight. Chris cited three different human translations of the same Russian novel. All differed in tone and emotional impact. Which one was closest to the author’s original vision? And could ChatGPT translate such a novel?

It creates content, for example:

Chris asked it to write a poem about the Mediterranean, and then to “regenerate” another one. Here are the results. There are repetitive lines in the first attempt and a deadly punch line in the second! (Grade C)

ChatGPT works best when prompted with examples. “Tell me a joke like this one …”

It can be used to improve writing style…

I didn’t use it for this web page – and it shows! – CBJ

It can generate data in a table and extract data from spreadsheets

It explains words and concepts, and often gets them wrong. It does not (yet) say where it got its information from. This is supposed to change with the version of ChatGPT integrated into the Bing search engine.

AI can even hold its own at games like “Diplomacy”, although the system that did so, Meta’s CICERO, is not ChatGPT.

But ChatGPT says it will not replace Google Search.

Some Weaknesses:

It’s based on a fixed neural network. Artificial neural networks have a predefined model: no neurons or connections can be added or removed. This is unlike the neurones in human brains, which are constantly making new connections and discarding old ones. We will have to wait for GPT-4 for an update.

It gives wrong answers – see above. It sometimes writes plausible-sounding but incorrect or nonsensical answers (“hallucinating”). This can be very dangerous: it writes so well that people can easily be fooled.

It can go mad – it has been hacked and made crazy, with amusing results (see below).

It is limited to training data up to 2021, although browser add-ons like WebChatGPT enable it to connect to the Internet and use more recent information.

https://chrome.google.com/webstore/detail/webchatgpt-chatgpt-with-i/lpfemeioodjbpieminkklglpmhlngfcn

(More and more extensions are being written every day.)

Prone to biases – when the training data are biased, ChatGPT is too. Research suggests that ChatGPT exhibits a pro-environmental, left-libertarian orientation. On the other hand, “In one instance, ChatGPT generated a rap indicating that women and scientists of colour were inferior to white and male scientists.”

Running and energy costs – being hosted on large numbers of computer servers, it consumes huge amounts of electricity (by contrast, human brains can survive happily on a couple of meals and a coffee every day).

Unstable – it’s often overloaded because so many people are using it.

Implications for society:

Cyber security – it can write authentic-looking phishing e-mails and websites.

In academia – it can write introductions and abstracts (good) and plagiarise with skill (bad).

Toxic and illegal content – it has deliberately been restricted in what it can say about illegal activities (like manufacturing drugs or bombs) and other toxic content. Who decides what is toxic? Media report that low-paid trainers in Kenya were traumatised by labelling nasty material as toxic for the training programme. Even so, it has been tricked into describing how to make a bomb.

“…it will make many office jobs more efficient by helping to write invoices or letters,” said Bill Gates, the Microsoft co-founder.

It could be “corrupted” by companies wishing to promote their products: “Which is the best car …?”

Note: humans have to be involved in training and setting the parameters for all this, in the same way that AI used for creating art, music, etc. requires input from human beings:

https://impakter.com/art-made-by-ai-wins-fine-arts-competition/

Rival conversational AI:

Bard – Google

https://blog.google/technology/ai/bard-google-ai-search-updates/

Bard messed up at a demo. Asked “What new discoveries from the James Webb Space Telescope can I tell my 9-year-old about?”, Bard responded with a series of bullet points, including one that read: “JWST took the very first pictures of a planet outside of our own solar system.”

According to NASA, however, the first image showing an exoplanet — or any planet beyond our solar system — was actually taken by the European Southern Observatory’s Very Large Telescope nearly two decades ago, in 2004.

https://edition.cnn.com/2023/02/08/tech/google-ai-bard-demo-error/index.html

Ernie bot / Wenxin Yiyan – Baidu (China)

Search GPT – Korea

YaLM 2.0 – Russia

OPT – Meta (Facebook)

Microsoft is powering its search engine, Bing, with ChatGPT. This has yielded some interesting and embarrassing results:

How Bing’s AI Chatbot went bonkers over the Spanish prime minister’s ‘beard’

and

Microsoft’s new ChatGPT AI starts sending ‘unhinged’ messages to people – System appears to be suffering a breakdown as it ponders why it has to exist at all

https://www.independent.co.uk/tech/bing-microsoft-chatgpt-ai-unhinged-b2281802.html

It is being integrated into other browsers, e.g. Opera:

https://www.theverge.com/2023/2/11/23595784/opera-browser-chatgpt-sidebar-ai

…and it can be added to Google Docs so that you have your own AI text editor. It looks useful for Google Sheets too:

https://workspace.google.com/marketplace/app/gpt_for_sheets_and_docs/677318054654

Many AI chat projects and search engines are being developed, e.g. You.com.

What are the implications for the future? Destructive, constructive, or both? Is it a danger to society or a boon? Will it be the next great leap forward, as the invention of the Internet was?

Think about it: job types going obsolete, automated marketing blurb, journalism disappearing(?), new tools or loss of jobs in the creative industries, more fake news, more polarisation in politics, etc.

Here’s a scary video!

ChatGPT is an AI-powered chatbot developed by OpenAI. OpenAI was co-founded by Elon Musk, but in recent years he has been increasingly critical of the organization. So what is OpenAI, and how dangerous is it really? Chapters: 0:00 Intro; 3:45 Founding of OpenAI; 9:05 Microsoft strategic investment; 13:00 Dangers of OpenAI.

Chris Betterton-Jones – Knowledge junkie